In technology, taking an idea from inception to value involves many moving pieces, each of which introduces risk. DevOps helps organizations break down the barriers between teams to reduce that risk and reach value faster. To put DevOps into practice, developers can use containers for improved automation and on-demand deployment. In effect, containers help teams make DevOps possible.

Consider this simple scenario of a single web application with persistence:

- The application is developed on a developer’s workstation and must move through a series of development and testing environments before landing in production.

- Deployment may rely on manual instructions that differ across hardware configurations, operating system versions, and software packages at various patch levels.

- Those differences are often not discovered until after the application is deployed, because operations teams and development teams do not typically work side by side on an application. That’s a problem.

“All problems in Computer Science can be solved by another level of indirection.”

-Butler Lampson, 1972

Hardware Virtualization and Containers

Hardware virtualization, the creation of virtual computers and operating systems, took a step toward improving scenarios like the one above by abstracting hardware away from the operating system. However, it only virtualized a small piece of an application’s deployment and didn’t solve any of the communication concerns between development teams and operations teams. Fortunately, some operating systems already had another layer of separation built in, called “containers.”

A container is a virtualization layer that provides a private operating system environment for software deployment with very little overhead, which results in startup times measured in seconds. Using a scripting language around this concept, a development team can decide exactly what’s needed and document those needs in an automation script. That script can then be checked into source control and later executed for an on-demand deployment of everything a piece of software needs to run.

Docker is one such implementation of containers with automated deployment scripts. It was released as an open source project almost three years ago and adoption has grown significantly in that short period of time, especially in the area of DevOps practices. The original Docker engine, however, was based on Linux Containers (LXC), so Windows users were out of luck if they needed a Windows-based software component, such as IIS or Microsoft SQL Server.

With Windows Server 2016 (now in Technical Preview 4), Microsoft has introduced support for Windows Server Containers and Hyper-V Containers. Although you can manage the containers with PowerShell, Microsoft has also opted in to the Docker ecosystem. It has added a fully compliant Docker engine, so you can interact with containers in the exact same way as you would for a Linux deployment.

So Let’s Put a Docker Script to Work

Now that we know what Docker for Windows containers is and why DevOps advocates are championing it, let’s look at a simple Docker script that we would save as “Dockerfile”.

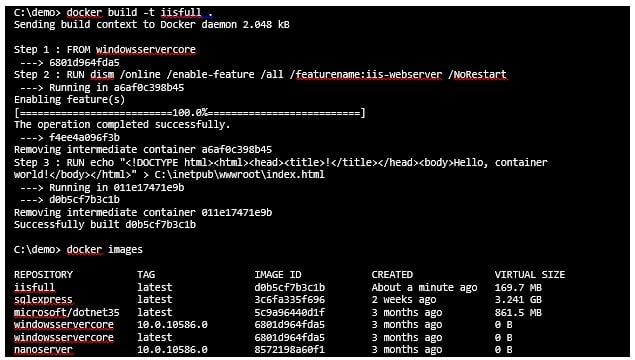

Containers use a layering approach: each build starts with a known base image and adds layers on top of it to create a new image. The first line in the above script retrieves that base image. The second line installs IIS in the image. Finally, the last line creates a simple HTML file and places it as the default page for the IIS site.
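For readers who cannot view the screenshot, a script matching that description might look roughly like the sketch below. The base image name, the DISM command used to enable IIS, and the page text are assumptions for illustration, not a transcription of the original script; use whichever Windows Server Core base image is available on your container host.

```dockerfile
# Start from a known base image (the image name is an assumption).
FROM windowsservercore

# Install IIS by enabling the web server feature with DISM (assumed install method).
RUN dism /online /enable-feature /all /featurename:iis-webserver /NoRestart

# Create a simple HTML file and place it as the default page of the IIS site.
RUN echo "Hello World - Dockerfile" > c:\inetpub\wwwroot\index.html
```

Each instruction adds a layer on top of the previous one, which is the layering approach described above.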

Once that script is saved, we can use the Docker command line interface (CLI) to execute the script, build a new image called “iisfull”, and then list the available images on the container host.

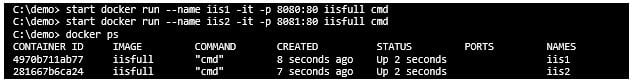
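In text form, the build and list steps would typically be the two commands below. This is a sketch that assumes the script sits in the current directory and that the Docker CLI is connected to a Windows container host; only the image name “iisfull” comes from the article.

```shell
# Build a new image tagged "iisfull" from the Dockerfile in the current directory.
docker build -t iisfull .

# List the images available on the container host; "iisfull" should now appear.
docker images
```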

Once the image is built, it’s ready for deployment. Below, we’ve told Docker to create two containers using our new image.

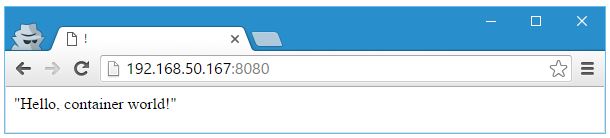

The first line will fire up a new container called “iis1” using the “iisfull” image created earlier. It will then publish port 80 of the container to port 8080 of the host and start a new interactive command terminal with the container. The second line does the same as the first, but maps port 80 of the container to port 8081 of the host. So we can now open up a browser and point it to port 8080 (or 8081) of the container host to view the page.
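The two commands described above would look something like the following sketch. The name of the second container (“iis2”) and the use of `cmd` as the interactive shell are assumptions; the image name and port numbers come from the text.

```shell
# Start container "iis1" from the "iisfull" image, publish container port 80
# on host port 8080, and open an interactive command prompt in the container.
docker run --name iis1 -it -p 8080:80 iisfull cmd

# Same image, second container ("iis2" is an assumed name), published on host port 8081.
docker run --name iis2 -it -p 8081:80 iisfull cmd
```

Note that the `-p` flag takes the host port first and the container port second. Because both containers start an interactive session, the second command would normally be run from a separate terminal.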

How Containers and Agile DevOps Will Solve Your Risk Problem

When a final image has been constructed, it can be deployed to any physical environment without modification to the script. As long as the environment supports Windows containers, the software component will behave the same on a developer’s desktop, an on-premises server, or a cloud service.

Containers align with DevOps practices by offering amazing opportunities for automating consistent, on-demand deployments of software components, and providing a mechanism for development and operations teams to effectively communicate about runtime environments. What are your thoughts on how you could use this technology?